With this challenge, we made available a large dataset of spectral domain OCT scans containing a wide variety of retinal fluid with accompanying reference annotation. In addition, an evaluation framework has been designed to allow all the methods developed to be evaluated and compared with one another in a uniform manner.

The RETOUCH challenge consists of two tasks:

- Detection: determining whether fluid is present in each OCT scan

- Segmentation: voxel-wise delineation of the fluid

Both tasks cover three fluid types: intraretinal fluid (IRF), subretinal fluid (SRF), and pigment epithelial detachment (PED). Please refer to the Background page for clinical details regarding the different fluid types.

Imaging Data

All OCT volumes are stored in ITK MetaImage format, consisting of an ASCII-readable header (oct.mhd) and a separate raw image data file (oct.raw). Full documentation is available at www.itk.org/Wiki/MetaIO. The data can be viewed with, for example, ITK-SNAP (www.itksnap.org/). The header file specifies the dimensions and voxel spacing of each volume. In the raw file, voxel values are stored consecutively, with the index running first over x, then y, then z. The B-scans are stacked along the z-dimension (see Figure 3 in Background).
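The voxel ordering described above can be illustrated with a small standard-library reader. This is a minimal sketch, not an official loader: it assumes an uncompressed .raw file, handles only the few `ElementType` values listed in `_TYPECODES`, and the helper names `parse_mhd`, `load_raw` and `voxel_at` are hypothetical. In practice a full MetaImage reader (e.g. from ITK) would normally be used.

```python
import array
import sys

# A few MetaImage element types mapped to Python array typecodes.
# Only these types are handled in this sketch (an assumption).
_TYPECODES = {"MET_UCHAR": "B", "MET_CHAR": "b", "MET_USHORT": "H",
              "MET_SHORT": "h", "MET_FLOAT": "f"}


def parse_mhd(text):
    """Parse the 'Key = Value' lines of an ASCII MetaImage (.mhd) header."""
    header = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            header[key.strip()] = value.strip()
    return header


def load_raw(header, raw_bytes):
    """Return (dims, flat voxel array) for uncompressed raw data."""
    dims = [int(v) for v in header["DimSize"].split()]
    voxels = array.array(_TYPECODES[header["ElementType"]])
    voxels.frombytes(raw_bytes)
    # Swap bytes if the file's byte order differs from this machine's.
    msb = header.get("ElementByteOrderMSB",
                     header.get("BinaryDataByteOrderMSB", "False"))
    if (msb.lower() == "true") != (sys.byteorder == "big"):
        voxels.byteswap()
    expected = dims[0] * dims[1] * dims[2]
    if len(voxels) != expected:
        raise ValueError(f"expected {expected} voxels, got {len(voxels)}")
    return dims, voxels


def voxel_at(dims, voxels, x, y, z):
    """Index runs first over x, then y, then z (one z index per B-scan)."""
    nx, ny, _ = dims
    return voxels[x + nx * (y + ny * z)]
```

For a volume with `DimSize = nx ny nz`, the voxel at (x, y, z) therefore sits at flat offset x + nx·(y + ny·z).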

Reference Standard

The reference standard is obtained from manual voxel-wise annotations of the fluid lesions. Manual annotation tasks were distributed among human graders from two medical centers:

- Medical University of Vienna, Austria. There were 4 graders supervised by one ophthalmology resident, all trained by 2 retinal specialists.

- Radboud University Medical Center, Nijmegen, The Netherlands. There were 2 graders supervised by a retinal specialist.

The reference standard is stored as an image of the same size as the corresponding OCT volume, with the following labels:

1. Intraretinal Fluid (IRF)

2. Subretinal Fluid (SRF)

3. Pigment Epithelium Detachments (PED)

The numbers in front of the structures indicate the voxel-wise labels. Everything else is labeled as 0.

Such a reference standard directly captures the presence, amount, and type of fluid inside the retina, all of which have a direct clinical interpretation.

Like the OCT volumes, all reference standard images are stored in ITK MetaImage format, with an ASCII-readable header (reference.mhd) and a separate raw image data file (reference.raw). The header gives the dimensions and spacing of each volume, and the raw voxel values are stored in the same order as the OCT data, with the index running first over x, then y, then z.
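As an illustration of how a label image encodes presence, amount, and type of fluid, the sketch below summarizes a flat label sequence. It assumes the label values 1 = IRF, 2 = SRF, 3 = PED in the order the fluids are listed above, with 0 as background. It also assumes `spacing` is the header's `ElementSpacing` (typically millimetres). The function name `fluid_summary` is hypothetical.

```python
from collections import Counter

# Assumed label values (0 = background).
LABELS = {1: "IRF", 2: "SRF", 3: "PED"}


def fluid_summary(labels, spacing):
    """Summarize presence and volume of each fluid type in a label volume.

    labels  -- flat sequence of voxel labels (any iteration order)
    spacing -- (sx, sy, sz) voxel spacing taken from ElementSpacing
    Returns {name: (present, voxel_count, volume)}; volume is in the
    spacing units cubed.
    """
    counts = Counter(labels)
    voxel_volume = spacing[0] * spacing[1] * spacing[2]
    summary = {}
    for value, name in LABELS.items():
        n = counts.get(value, 0)
        summary[name] = (n > 0, n, n * voxel_volume)
    return summary
```

Presence corresponds to the detection task and voxel counts to the segmentation task, so one label image serves both.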

Training and Test datasets

A training set of 70 OCT volumes is provided: 24, 24, and 22 volumes acquired with the Cirrus, Spectralis, and Topcon OCT devices, respectively.

The test set consists of 42 OCT volumes, 14 per OCT device manufacturer. The test set was annotated twice, once by each of the two medical centers. A single reference standard was created for the test set by consensus, i.e., a strict combination of the annotations from the two centers: a fluid type was considered present or absent in a scan or voxel only when both centers agreed.
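The strict consensus rule can be sketched as follows. This is an assumption-laden illustration, not the organizers' code. The `IGNORE` marker value and the function names are hypothetical. Scan-level disagreement is returned as `None`, because this section does not say how such scans are handled.

```python
IGNORE = -1  # hypothetical marker for voxels where the two centers disagree


def consensus_voxels(annotation_a, annotation_b):
    """Strict voxel-wise consensus of two flat label sequences."""
    if len(annotation_a) != len(annotation_b):
        raise ValueError("annotations must have the same number of voxels")
    return [a if a == b else IGNORE for a, b in zip(annotation_a, annotation_b)]


def consensus_presence(annotation_a, annotation_b, label):
    """Scan-level presence of one fluid label.

    Returns True/False when both centers agree, or None when they disagree.
    """
    present_a = label in annotation_a
    present_b = label in annotation_b
    return present_a if present_a == present_b else None
```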

Submission Guidelines

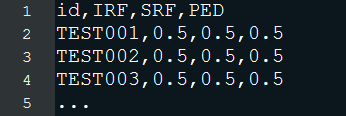

Challenge Task 1: Fluid Detection. The detection results should be provided in a single CSV file, with the first column containing the id of the test OCT scan and the second, third, and fourth columns containing the estimated probability (a value from 0.0 to 1.0) that the scan contains IRF, SRF, and PED, respectively.
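A minimal sketch of producing the four-column detection file with Python's `csv` module is shown below. Two points are assumptions: the input dictionary layout, and the absence of a header row, since whether one is expected is not stated here. The function name `detection_csv` is hypothetical.

```python
import csv
import io


def detection_csv(predictions):
    """Format detection results as CSV text.

    predictions -- {scan_id: (p_irf, p_srf, p_ped)}, each probability in [0, 1]
    Columns: scan id, then IRF, SRF, and PED probabilities (no header row).
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for scan_id in sorted(predictions):
        probs = predictions[scan_id]
        if len(probs) != 3 or not all(0.0 <= p <= 1.0 for p in probs):
            raise ValueError(f"{scan_id}: need three probabilities in [0, 1]")
        writer.writerow([scan_id, *probs])
    return out.getvalue()
```

The returned text can then be written to a file named detection.csv.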

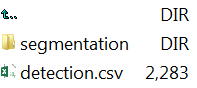

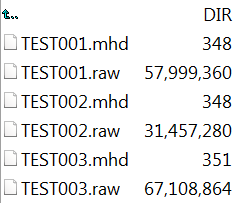

Challenge Task 2: Fluid Segmentation. The segmentation results should be provided as one image per test scan, with the segmented voxels labeled in the same way as in the reference standard. Your submission files should be named according to the OCT scan id and written in ITK MetaImage format (mhd and raw files), e.g., TEST001.mhd and TEST001.raw.
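The sketch below writes a MetaImage header and raw payload for one segmentation. It assumes unsigned 8-bit labels (`MET_UCHAR`). It also assumes `dim_size` and `spacing` are copied from the scan's oct.mhd, so the output matches the OCT volume's size. The function names are hypothetical. `ElementDataFile` is placed last because MetaIO expects it to be the final header field.

```python
def segmentation_mhd(scan_id, dim_size, spacing):
    """Return MetaImage header text for the segmentation of one test scan."""
    lines = [
        "ObjectType = Image",
        "NDims = 3",
        "DimSize = " + " ".join(str(d) for d in dim_size),
        "ElementSpacing = " + " ".join(str(s) for s in spacing),
        "ElementType = MET_UCHAR",  # assumed label data type
        "BinaryData = True",
        "BinaryDataByteOrderMSB = False",
        f"ElementDataFile = {scan_id}.raw",  # must be the last field
    ]
    return "\n".join(lines) + "\n"


def segmentation_raw(labels):
    """Pack flat labels (x fastest, then y, then z) as unsigned bytes."""
    return bytes(labels)  # raises ValueError for labels outside 0..255
```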

For upload, please compress the files into a ZIP archive. The archive should contain a file "detection.csv" and a folder "segmentation" (left figure). The segmentation folder should contain the results, saved under filenames corresponding to the ids of the OCT scans (right figure).
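The archive layout can be produced with Python's standard `zipfile` module. This is a minimal sketch: the function name `build_submission` and the input dictionary shape are hypothetical, and the scan ids follow the TEST001 naming example.

```python
import io
import zipfile


def build_submission(detection_text, segmentations):
    """Return ZIP bytes holding detection.csv and a segmentation/ folder.

    segmentations -- {scan_id: (mhd_text, raw_bytes)}, e.g. "TEST001"
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("detection.csv", detection_text)
        for scan_id, (mhd_text, raw_bytes) in segmentations.items():
            zf.writestr(f"segmentation/{scan_id}.mhd", mhd_text)
            zf.writestr(f"segmentation/{scan_id}.raw", raw_bytes)
    return buffer.getvalue()
```

The returned bytes can be saved with `open("submission.zip", "wb").write(data)`. The archive name itself is arbitrary here.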

Evaluation Framework

This challenge evaluates algorithm performance on fluid (1) detection and (2) segmentation, so there are two main leaderboards. The final challenge ranking is determined by the average score across the two leaderboards. In case of a tie, the segmentation score takes precedence.

Detection results will be compared to the manual grading of fluid presence. For each of IRF, SRF, and PED, a receiver operating characteristic (ROC) curve will be computed across all test set scans, and the area under the curve (AUC) will be calculated. Each team receives a rank (1 = best) for each fluid type based on its AUC value. The detection score is the sum of the three ranks, and the team with the lowest score is ranked #1 on the detection leaderboard.
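The detection scoring can be sketched as below. Here the ROC AUC is computed through its equivalent Mann-Whitney formulation, i.e. the fraction of (positive, negative) scan pairs ranked correctly, with ties counted as half. This is an illustration, not the challenge's evaluation code. The function names are hypothetical, and giving tied teams the best rank they share is an assumption, since the tie rule for ranks is not stated here.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via the Mann-Whitney statistic.

    labels -- 1 if the scan contains the fluid, 0 otherwise
    scores -- predicted probabilities; tied scores count as half a win
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative scan")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def rank_sum(results, higher_is_better=True):
    """Sum per-criterion ranks for each team (1 = best); lowest total wins.

    results -- {criterion: {team: value}}, e.g. {"IRF": {"A": 0.91, ...}}
    Tied teams share the best rank they occupy (an assumption).
    """
    totals = {}
    for values in results.values():
        for team, v in values.items():
            better = sum(1 for w in values.values()
                         if (w > v if higher_is_better else w < v))
            totals[team] = totals.get(team, 0) + better + 1
    return totals
```

The pairwise loop is quadratic in the number of scans, which is negligible for a 42-scan test set.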

Submitted segmentation results will be compared to the double manually annotated reference standard. The Dice index (DI) and the absolute volume difference (AVD) will be calculated as segmentation evaluation measures. Voxels where the two annotations differ will be excluded from the evaluation. Because image quality varies considerably between OCT manufacturers, segmentation results will additionally be summarized separately for each manufacturer. Each team therefore receives a rank (1 = best) for each combination of fluid type, OCT manufacturer, and evaluation measure, based on the mean value of that measure over the corresponding set of test images. The segmentation score is the sum of these 18 individual ranks (3 fluid types × 3 manufacturers × 2 evaluation measures), and the team with the lowest score is ranked #1 on the segmentation leaderboard.
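For one fluid label, the two measures can be sketched as Dice = 2|P ∩ R| / (|P| + |R|) and AVD = ||P| − |R|| × voxel volume, skipping excluded voxels. This is a minimal sketch under stated assumptions, not the official evaluation code. Excluded voxels are assumed to be marked with a hypothetical `IGNORE` value in the reference. `dice_and_avd` is a hypothetical name. Returning `None` for Dice when neither volume contains the label is also an assumption, since this section does not say how that case is scored.

```python
IGNORE = -1  # hypothetical marker for voxels where the two annotations differ


def dice_and_avd(prediction, reference, label, voxel_volume=1.0):
    """Dice index and absolute volume difference for one fluid label.

    prediction, reference -- flat label sequences of equal length
    Voxels whose reference value is IGNORE are skipped.
    Returns (dice, avd); avd is in units of voxel_volume.
    """
    pred_n = ref_n = overlap = 0
    for p, r in zip(prediction, reference):
        if r == IGNORE:
            continue  # excluded from evaluation
        in_pred = p == label
        in_ref = r == label
        pred_n += in_pred
        ref_n += in_ref
        overlap += in_pred and in_ref
    dice = None if pred_n + ref_n == 0 else 2 * overlap / (pred_n + ref_n)
    avd = abs(pred_n - ref_n) * voxel_volume
    return dice, avd
```

Dice is higher-is-better while AVD is lower-is-better, so the two measures have to be ranked in opposite directions.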